Monitoring and evaluation are tools and strategies that help a project know when plans are not working and circumstances have changed.

They give the management the information it needs to make decisions about the project and the necessary changes for strategy or plans. In this sense, monitoring and evaluation are iterative.

Monitoring and Evaluation: Definition, Process, Objectives, Design, Planning, Differences, Methods

What is Monitoring and Evaluation?

Although the term “monitoring and evaluation” tends to get run together as if it is only one thing, monitoring and evaluation are, in fact, two distinct sets of organizational activities.

Monitoring is the periodic assessment of programmed activities to determine whether they proceed as planned.

Evaluation, by contrast, assesses how far programs have progressed toward achieving their results, milestones, and intended impact, using performance indicators.

Both activities require dedicated funds, trained personnel, monitoring and evaluation tools, effective data collection and storage facilities, and time for effective inspection visits in the field.

What follows from the discussion is that both monitoring and evaluation are necessary management tools to inform decision-making and demonstrate accountability.

Evaluation is not a substitute for monitoring, nor is monitoring a substitute for evaluation. Systematically generated monitoring data are essential for a successful evaluation.

Monitoring Definition

Monitoring is the continuous and systematic assessment of project implementation based on targets set and activities planned during the planning phases of the work and the use of inputs, infrastructure, and services by project beneficiaries.

It is about collecting information that will help answer questions about a project, usually about the way it is progressing toward its original goals and how the objectives and approaches may need to be modified.

It provides managers and other stakeholders with continuous feedback on implementation and identifies actual or potential successes and problems as early as possible, which facilitates timely adjustments to project operation as and when necessary.

Monitoring tracks the actual performance against what was planned or expected by collecting and analyzing data on the indicators according to pre-determined standards.
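The comparison of actual performance with planned targets can be sketched in a few lines of Python. This is only an illustration: the `Indicator` class, the `progress_report` function, the 10% tolerance, and all figures are assumptions made for the example, not part of any standard monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    name: str
    planned: float  # target set during planning (assumed to be > 0)
    actual: float   # value observed during monitoring


def progress_report(indicators, tolerance=0.10):
    """Return (name, achievement ratio, status) for each indicator.

    An indicator is flagged "off track" when the actual value falls more
    than `tolerance` (a hypothetical 10% threshold) below the planned value.
    """
    report = []
    for ind in indicators:
        ratio = ind.actual / ind.planned
        status = "on track" if ratio >= 1 - tolerance else "off track"
        report.append((ind.name, round(ratio, 2), status))
    return report


# Hypothetical figures, for illustration only.
indicators = [
    Indicator("loans disbursed", planned=200, actual=120),
    Indicator("training sessions held", planned=10, actual=10),
]
for name, ratio, status in progress_report(indicators):
    print(f"{name}: {ratio:.0%} of plan, {status}")
```

In practice the threshold and the indicators themselves would come from the project's own planning documents.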

If done properly, it is an invaluable tool for good management and provides a useful base for evaluation. It also identifies strengths and weaknesses in a program.

The performance information generated from monitoring enhances learning from experience and improves decision-making.

It enables you to determine whether the resources available are sufficient and are being well used, whether the capacity you have is sufficient and appropriate, and whether you are doing what you planned to do.

Monitoring information is collected in a planned, organized, and routine way at specific times, for example, daily, monthly, or quarterly.

At some point, this information needs to be collated, brought together, and analyzed so that it can answer questions such as:

- How well are we doing?

- Are we doing the right things?

- What differences are we making?

- Does the approach need to be modified, and if so, how?

Example

This example is drawn from the World Bank Technical paper entitled “Monitoring and Evaluating Urban Development Programs: A Handbook for Program Managers and Researchers” by Michael Bamberger.

The author describes a monitoring study that, by way of rapid survey, was able to determine that the amount of credit in a micro-credit scheme for artisans in Brazil was too small.

The potential beneficiaries were not participating due to the inadequacy of the loan size for their needs. This information was then used to make some important changes in the project.

Bamberger defines monitoring as: “an internal project activity designed to provide constant feedback on the progress of a project, the problems it is facing, and the efficiency with which it is being implemented.”

Evaluation Definition

Evaluation, on the other hand, is a periodic, in-depth, time-bound analysis that attempts to assess systematically and objectively the relevance, performance, impact, success (or lack thereof), and sustainability of ongoing and completed projects in relation to stated objectives.

It studies the outcome of a project (changes in income, better housing quality, distribution of the benefits between different groups, the cost-effectiveness of the projects as compared with other options, etc.) to inform the design of future projects.

Evaluation relies on data generated through monitoring activities as well as information obtained from other sources such as studies, research, in-depth interviews, focus group discussions, etc.

Project managers undertake interim evaluations during the implementation as the first review of progress, a prognosis of a project’s likely effects, and as a way to identify necessary adjustments in project design.

In essence, evaluation is the comparison of actual project impacts against the agreed-upon strategic plans.

It is essentially undertaken to look at what you set out to do, at what you could accomplish, and how you accomplished it.

Example

Once again, we refer to Bamberger, who describes an evaluation of a co-operative program in El Salvador that determined that the co-operatives improved the lives of the few families involved but did not have a significant impact on overall employment.

Differences between Monitoring and Evaluation

The main distinguishing characteristics of monitoring and evaluation are:

| Monitoring | Evaluation |
| --- | --- |
| Continuous process | Periodic: at essential milestones, such as the mid-term of program implementation, at the end, or a substantial period after program conclusion |
| Keeps track; oversight; analyzes and documents progress | In-depth analysis; compares planned with actual achievements |
| Focuses on inputs, activities, outputs, implementation processes, continued relevance, and likely results at outcome level | Focuses on outputs in relation to inputs; results in relation to cost; processes used to achieve results; overall relevance; impact; and sustainability |
| Answers what activities were implemented and what results were achieved | Answers why and how results were achieved. Contributes to building theories and models for change |
| Alerts managers to problems and provides options for corrective measures | Provides managers with strategy and policy options |
| Self-assessment by program managers, supervisors, community stakeholders, and donors | Internal and/or external analysis by program managers, supervisors, community stakeholders, donors, and/or external evaluators |

Formative and Summative Evaluation

The task of evaluation is two-fold: formative and summative.

Formative evaluation is undertaken to improve the strategy, design, and performance or way of functioning of an on-going program/project.

The summative evaluation, on the other hand, is undertaken to make an overall judgment about the effectiveness of a completed project that is no longer functioning, often to ensure accountability.

We usually seek to answer the following questions in formative evaluation:

- What are the strengths and weaknesses of the program?

- What is the progress towards achieving the desired outputs and outcomes?

- Are the selected indicators pertinent and specific enough to measure the outputs?

- What is happening, that was not expected?

- How are staff and clients interacting?

- What are implementers’ and target groups’ perceptions of the program?

- How are the funds being utilized? How is the external environment affecting the internal operations of the program?

- What new ideas are emerging that can be tried out and tested?

Questions that one asks in the summative evaluation are:

- Did the program work? Did it contribute towards the stated goals and outcomes? Were the desired outputs achieved?

- Was implementation in compliance with funding mandates? Were funds used appropriately for the intended purposes?

- Should the program be continued or terminated? Expanded? Replicated?

Apart from the questions raised above in formative and summative evaluation, more often, we raise the following questions in the evaluation process to gain knowledge and a better understanding of the mechanisms through which the program results have been achieved.

- What is the assumed logic through which inputs and activities are expected to produce outputs that bring about the ultimate change in the status of the target population or situation?

- What types of interventions are successful under what conditions?

- How can outputs/outcomes best be measured?

- What lessons were learned?

- What are policy options available as a result of program activities?

Conventional and Participatory Evaluation

Evaluation can broadly be viewed as being participatory and conventional.

A participatory approach is a broad concept focusing on the involvement of primary and other stakeholders in undertakings such as program planning, design, implementation, monitoring, and evaluation.

This approach differs from what we know as a conventional approach in several ways. The following section, adapted from Estrella (1997), compares these two approaches to evaluation.

Conventional Evaluation

- It aims at making a judgment on the program for accountability purposes rather than empowering program stakeholders.

- It strives for the scientific objectivity of Monitoring and Evaluation findings, thereby distancing the external evaluators from stakeholders.

- It tends to emphasize the information needs of program funding agencies and policymakers rather than those of program implementers and people affected by the program.

- It focuses on the measurement of success according to predetermined indicators.

Participatory Evaluation

It is a process of individual and collective learning and capacity development through which people become more aware and conscious of their strengths and weaknesses, their wider social realities, and their visions and perspectives of development outcomes.

This learning process creates conditions conducive to change and action.

- It emphasizes varying degrees of participation from low to high of different types of stakeholders in initiating, defining the parameters for, and conducting Monitoring and Evaluation.

- It is a social process of negotiation between people’s different needs, expectations, and worldviews. It is a highly political process that addresses issues of equity, power, and social transformation.

- It is a flexible process, continuously evolving and adapting to the program-specific circumstances and needs.

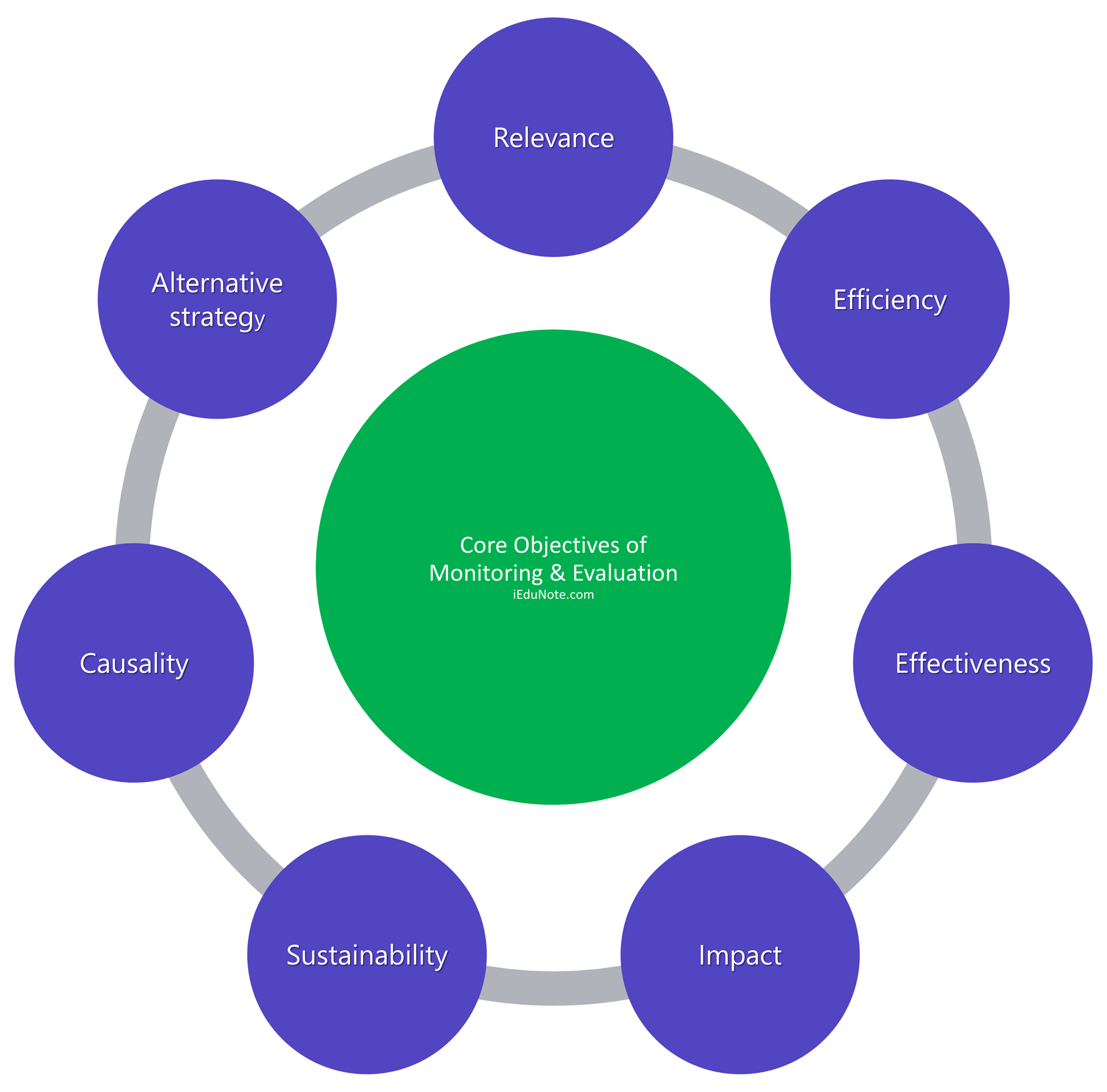

Core Objectives of Monitoring and Evaluation

While monitoring and evaluation are two distinct elements, they are decidedly geared towards learning from what you are doing and how you are doing it by focusing on many essential and common objectives:

- Relevance

- Efficiency

- Effectiveness

- Impact

- Sustainability

- Causality

- Alternative strategy

These elements may be referred to as the core objectives of monitoring and evaluation. A schematic diagram is presented in the accompanying figure to illustrate how these elements operate in actual settings.

We present a brief overview of these elements.

Relevance

Relevance examines the appropriateness of program results to national needs and to the priorities of target groups. Some critical questions related to relevance include:

- Do the program results address the national needs?

- Are they in conformity with the program’s priorities and policies?

- Should the results be adjusted or eliminated, or new ones added, in light of new needs, priorities, and policies?

Efficiency

Efficiency tells you whether the input into the work is appropriate in terms of the output. It assesses the results obtained with the expenditure incurred and the resources used by the program during a given time.

The analysis focuses on the relationship between the quantity, quality, and timeliness of inputs, including personnel, consultants, travel, training, equipment, and miscellaneous costs, and the quantity, quality, and timeliness of the outputs produced and delivered.

It ascertains whether there was adequate justification for the expenditure incurred and examines whether the resources were spent as economically as possible.
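One simple way to express the input-output relationship described above is cost per unit of output. The sketch below is a minimal illustration under stated assumptions: the function name `cost_per_output` and all figures are hypothetical, and the measure ignores the quality and timeliness dimensions that a full efficiency analysis would also consider.

```python
def cost_per_output(total_cost, outputs_delivered):
    """Cost incurred per unit of output (lower means more efficient)."""
    if outputs_delivered <= 0:
        raise ValueError("outputs_delivered must be positive")
    return total_cost / outputs_delivered


# Hypothetical figures: total expenditure and number of people trained.
program_a = cost_per_output(total_cost=50_000, outputs_delivered=400)
program_b = cost_per_output(total_cost=36_000, outputs_delivered=240)

print(program_a)  # 125.0 per person trained
print(program_b)  # 150.0 per person trained
```

Under these made-up numbers, program A delivers each output at a lower cost, although it spends more in total.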

Effectiveness

Effectiveness is a measure of the extent to which a project (or development program) achieves its specific objectives.

If not, the evaluation will identify whether the results should be modified (in the case of a mid-term evaluation) or the program be extended (in the case of a final evaluation) to achieve the stated results.

If, for example, we conducted an intervention study to improve the qualifications of all high school teachers in a particular area, did we succeed?

Impact

Impact tells you whether or not your action made a difference to the problem situation you were trying to address.

In other words, was your strategy useful?

Referring to the above example, we ask: did ensuring that teachers were better qualified improve the schools’ final examination results?

Sustainability

Sustainability refers to the durability of program results after the termination of the technical cooperation channeled through the program. Some likely questions raised on this issue are:

- How likely is it that the program achievements will be sustained after the withdrawal of external support?

- Do we expect that the involved counterparts are willing and able to continue the program activities on their own?

- Have program activities been integrated into current practices of counterpart institutions and/or the target population?

Causality

An assessment of causality examines the factors that have affected the program results. Some key questions related to causality, among others, are:

- What particular factors or events have modified the program results?

- Are these factors internal or external to the program?

Alternative strategy

Program evaluation may find significant unforeseen positive or negative results of program activities.

Once identified, appropriate action can be taken to enhance or mitigate them for a more significant overall impact. Some questions related to unanticipated results often raised are:

- Were there any unexpected positive or negative results of the program?

- If so, how should they be addressed? Can they be either enhanced or mitigated to achieve the desired output?

Types of Evaluation Methods [For Effective Practice]

There are several ways of doing an evaluation. Some of the more common methods you may have come across are:

- Self-evaluation

- Internal evaluation

- Rapid participatory appraisal

- External evaluation

- Interactive evaluation

Self-evaluation

This involves an organization or project holding up a mirror to itself and assessing how it is doing, as a way of learning and improving practice.

It takes a very self-reflective and honest organization to do this effectively, but it can be a practical learning experience too.

Internal evaluation

This is intended to involve as many people with a direct stake in the work as possible.

This may mean project staff and beneficiaries are working together on the evaluation. If an outsider is called in, he or she is to act as a facilitator of the process, but not as an evaluator.

Rapid participatory appraisal

This is a qualitative way of doing evaluations. It is semi-structured and carried out by an interdisciplinary team over a short time.

It is used as a starting point for understanding a local situation and is a quick, cheap, and useful way to gather information. It involves the use of secondary data review, direct observation, semi-structured interviews, key informants, group discussions, games, diagrams, maps, and calendars.

In an evaluation context, it allows one to get valuable input from those who are supposed to be benefiting from the development work. It is flexible and interactive.

External evaluation

This is an evaluation done by a carefully chosen outsider or outsider team with adequate experience and expertise.

Interactive evaluation

This involves a very active interaction between an outside evaluator or evaluation team and the personnel in an organization or project being evaluated.

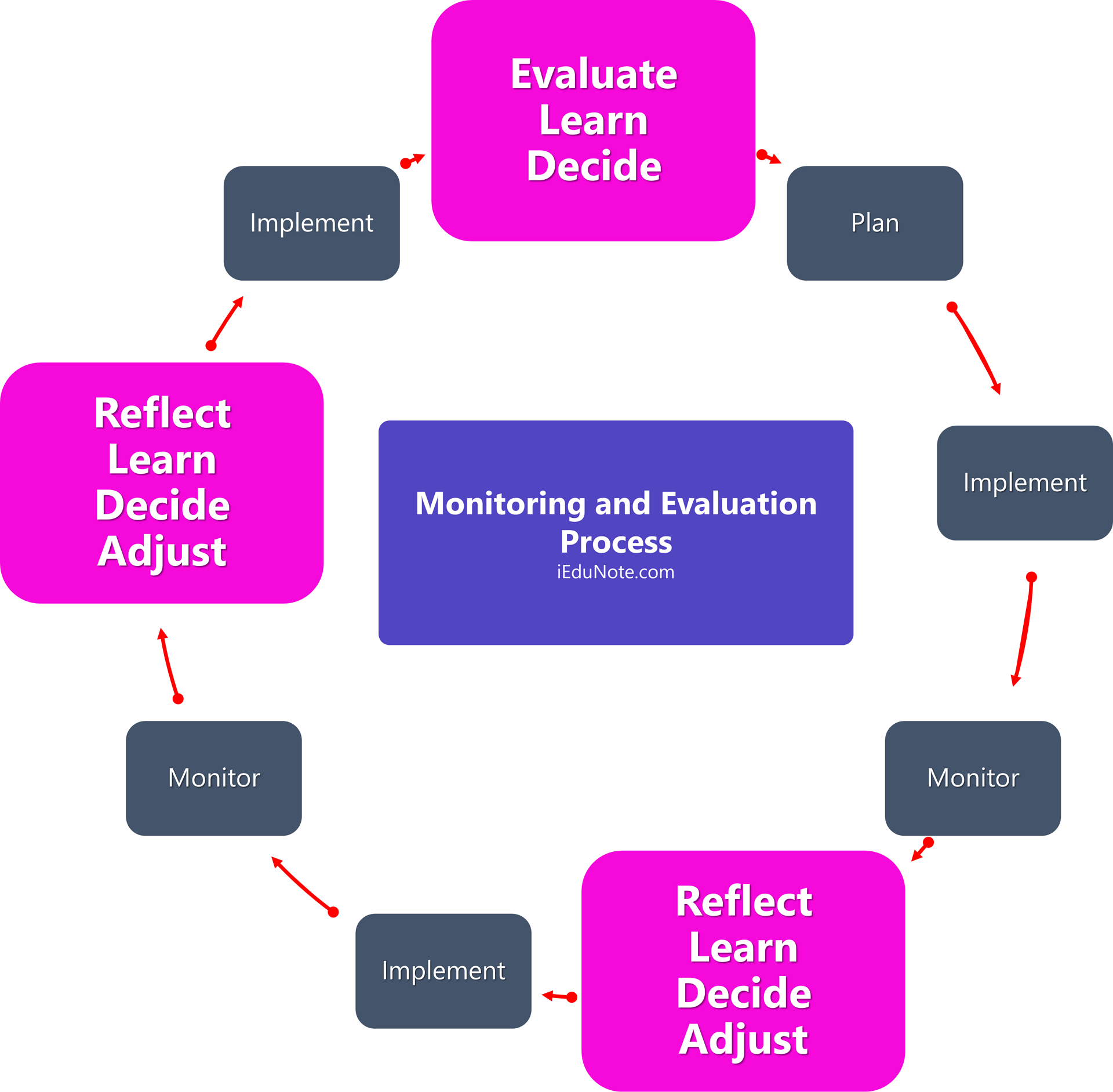

Monitoring and Evaluation Process

The process inherent in the monitoring and evaluation system can be seen in the accompanying schematic flow chart.

Monitoring and evaluation enable us to check the bottom line of development work.

In development work, the term bottom line means whether we are making a difference to the problem or not, while in business, the term refers to whether we are making a profit or not.

In monitoring and evaluation, we do not look for a profit; rather, we want to see whether we are making a difference compared with the earlier situation. Monitoring and evaluation systems can be an effective way to:

- Provide constant feedback on the extent to which the projects are achieving their goals;

- Identify potential problems and their causes at an early stage and suggest possible solutions to problems;

- Monitor the efficiency with which the different components of the project are being implemented and suggest improvement;

- Evaluate the extent to which the project can achieve its general objectives;

- Provide guidelines for the planning of future projects;

- Improve project design;

- Incorporate the views of the stakeholders;

- Show need for mid-course corrections.

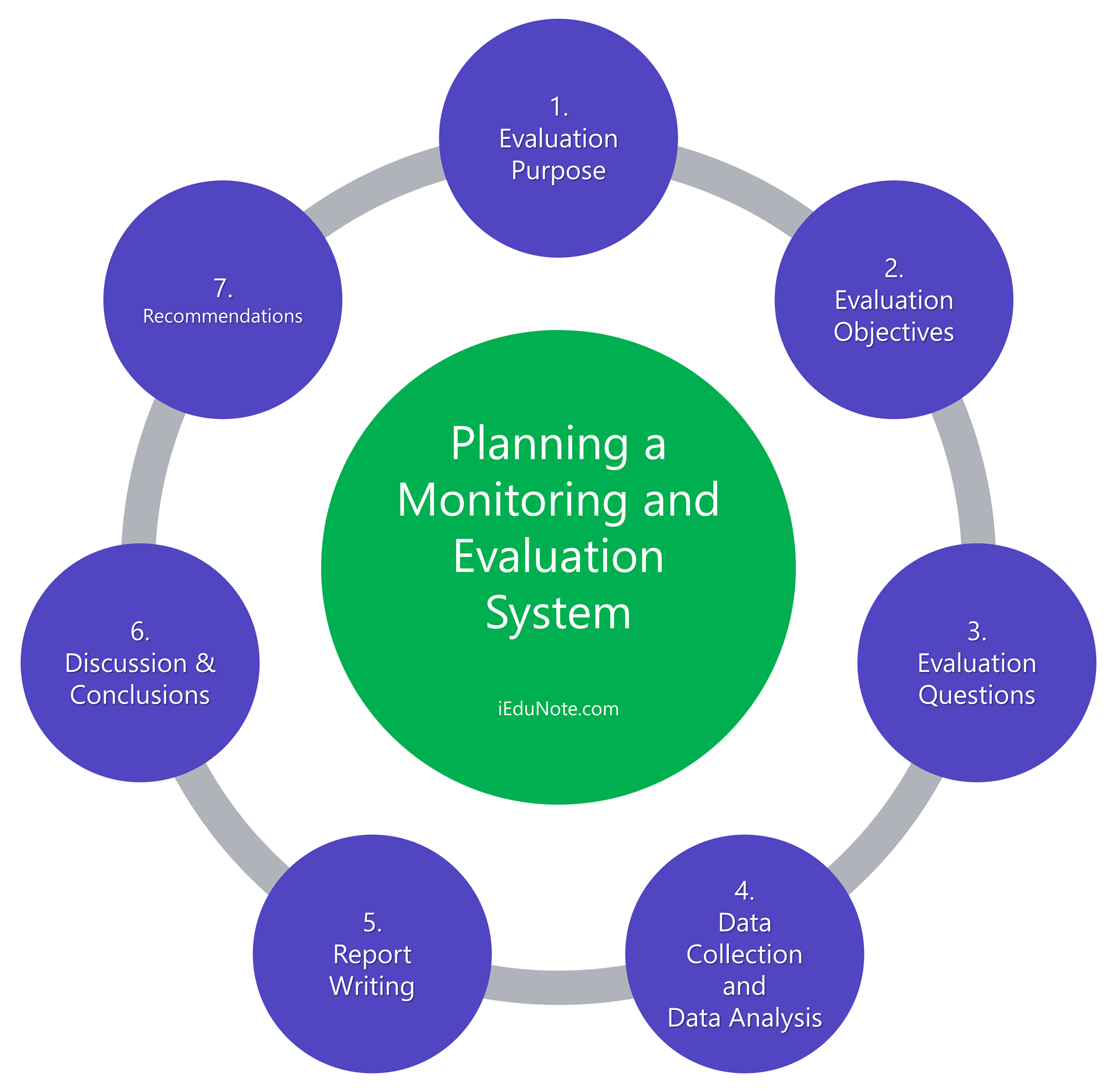

Planning Monitoring and Evaluation System

Monitoring and evaluation are an integral part of the planning process so that timely evaluation results can be made available to decision-makers and ensure that the donors can demonstrate accountability to their stakeholders.

Evaluation results are useful for making adjustments in the ongoing program or for purposes of designing a new program cycle.

Careful planning of evaluations and periodic updating of evaluation plans also facilitates their management and contributes to the quality of evaluation results.

When you do your planning process, you will set indicators. These indicators provide the framework for your monitoring and evaluation system.

In planning evaluation and monitoring activities, we should look at:

- What do we want to know? This question refers to the main objectives of the evaluation and the question it should address.

- Why is the program (project/organization) being evaluated?

- How will the data for evaluation be collected? This refers to the data sources and methods to be used.

- Who will undertake the evaluation task? What expertise is required?

- When will the evaluation be undertaken? This refers to the timing of each evaluation so that their results in each case or combination can be used to make important program-related decisions.

- What is the budgetary provision to implement the evaluation plan?

Logical framework analysis is a very useful approach that lends itself well to the planning of monitoring and evaluation processes.

The following flowchart displays such a framework incorporating all the levels of monitoring and evaluation process.

Designing Monitoring and Evaluation Program

Most evaluations are concerned with the issue of program design. Design issues refer to the factors affecting evaluation results. These factors appear during program implementation.

A good design guides the implementation process to facilitate monitoring of implementation and provides a firm basis for performance evaluation.

Some key questions related to design are:

- Are inputs and strategies realistic, appropriate, and adequate to achieve the results?

- Are outputs, outcomes, and impact clearly stated, describing solutions to identified problems and needs?

- Are indicators direct, objective, practical, and adequate (DOPA)? Is the responsibility for tracking them identified?

- Have the external factors and risk factors to the program that could affect implementation been identified and have the assumptions about such risk factors been validated?

- Have the execution, implementation, and evaluation responsibilities been identified?

- Does the program design address the prevailing gender situation? Are the expected gender-related changes adequately described in the outputs? Are the identified gender indicators adequate?

- Does the program include strategies to promote national capacity building?

Proper monitoring and evaluation design during project preparation is a much broader exercise than just the development of indicators.

Good design has five components, viz.

- Clear statements of measurable objectives for the project and its components, for which indicators can be defined;

- A structured set of indicators, covering outputs of goods and services generated by the project and their impact on beneficiaries;

- Provisions for collecting data and managing project records so that the necessary data for indicators are comparable with existing statistics, and are available at a reasonable cost;

- Institutional arrangements for gathering, analyzing and reporting project data, and for investing in capacity building to sustain the monitoring and evaluation services;

- Proposals for how the monitoring and evaluation will be fed back into decision-making.

As there are differences between the design of a monitoring system and that of an evaluation process, we deal with them separately.

Under monitoring, we look at the process an organization could go through to design a monitoring system, and under evaluation, we look at:

- purpose of evaluation,

- key evaluation questions, and

- methodology.

Designing a Monitoring System

In designing a monitoring system for an organization or a project, the following steps are suggested:

- Organize an initial workshop with staff, facilitated by consultants;

- Generate a list of indicators for each of the three aspects: efficiency, effectiveness, and impact;

- Clarify which variables you are interested in so that you can gather data on them;

- Decide the method of collecting the data you need. Also, decide how you are going to manage data;

- Decide how often you will analyze data;

- Analyze data and report.

Designing an Evaluation System

Designing an evaluation process means being able to develop Terms of Reference (TOR) for such a process so that timely evaluation information is available to inform decision making and ensure that program implementation can demonstrate accountability to its stakeholders.

Evaluation results are important for making adjustments in the ongoing program, or to design a new program cycle.

Careful designing of evaluation and periodic updating of evaluation plans also facilitates their management and contributes to the quality of evaluation results.

In planning and designing an evaluation study, the following issues are usually addressed:

- The purpose of evaluation, including who will use the evaluation findings;

- The main objectives of the evaluation and the questions it should address;

- The sources of data and the methods to be followed in collecting and analyzing data;

- The persons to be involved in evaluation exercise;

- The timing of each evaluation;

- The budget requirement.

We provide a brief overview of the above aspects.

Purpose

The purpose of an evaluation is the reason why we are doing it. It goes beyond what we want to know to why we want to know it.

For example, an evaluation purpose may be: “To assess whether the project under evaluation has had its planned impact, in order to decide whether or not to replicate the model elsewhere,” or “To assess the program in terms of its effectiveness, impact on the target group, efficiency, and sustainability, in order to improve its functioning.”

Evaluation questions

The key evaluation questions are the central questions we want the evaluation process to answer. One can seldom answer “yes” or “no” to them.

A useful evaluation question should be thought-provoking, challenging, focused, and capable of raising additional questions.

Here are some examples of key evaluation questions related to an ongoing project:

- Who is currently benefiting from the project, and in what way?

- Do the inputs (money and time) justify the outputs? If so or if not, on what basis is this claim justified?

- What other strategies could improve the efficiency, effectiveness, and impact of the current project?

- What are the lessons that can be learned from the experiences of this project in terms of replicability?

Methodology

The methodology section of the ‘terms of reference’ should provide a broad framework for how the project wants the work of the evaluation done.

Both primary and secondary data sources may be employed to obtain evaluation information.

The primary sources may include such sources as a survey, key informants, and focus group discussions. The secondary sources may be, among others, published reports, datasheets, minutes of the meetings, and the like.

This section might also include some indication of reporting formats:

- Will all reporting be written?

- To whom will the reporting be made?

- Will there be an interim report? Or only a final report?

- What sort of evidence does the project require to back up the evaluator’s opinion?

- Who will be involved in the analysis?

Information collection

This section is concerned with two aspects:

- Baselines and damage control, and

- Methods.

By damage control, we mean what one would need to do if he/she failed to get baseline information when the evaluation started.

The collection of baseline information may involve general information about the situation.

Official statistics often serve this purpose. If not, you may need to secure these data by conducting a comprehensive field survey. In doing so, be certain that you collect information that focuses on the indicators of impact.

Suppose you decide to use life expectancy as an indicator of the mortality condition of a country.

Several variables might have an impact on life expectancies, such as gender, socioeconomic conditions, religion, environment, sanitation, and the like.

The right choice of the variables will enable you to measure the impact and effectiveness of the programs, thereby improving their efficiency.

Unfortunately, if you did not collect baseline information at the beginning of the project, it is not always possible to obtain it after work has begun, because the situation may have changed over time.

You may not even have decided what your important indicators were when the project began.

This is where damage control comes in.

However, you may get anecdotal information from those who were involved at the beginning, and you can ask participants retrospectively if they remember what the situation was when the project began.

Sometimes the use of control groups is a neat solution to this problem. Control groups are groups of people that have not had input from your project but are very similar to those whom you are working with.
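A comparison with a control group can be sketched as a simple difference in mean outcomes. This is a deliberately naive illustration: the function name `naive_effect` and the income figures are hypothetical, and the estimate is only meaningful under the assumption that the two groups really are similar apart from project participation.

```python
from statistics import mean


def naive_effect(participants, control):
    """Difference in mean outcome between participants and a control group.

    Assumes the groups are comparable in everything except exposure
    to the project; no statistical significance test is performed.
    """
    return mean(participants) - mean(control)


# Hypothetical monthly incomes observed at evaluation time.
participants = [120, 135, 150, 145]
control = [110, 125, 130, 115]

print(naive_effect(participants, control))  # 17.5
```

A real evaluation would also check whether a difference of this size could have arisen by chance.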

Monitoring and evaluation processes can extensively use random sampling procedures. This ranges from very simple sampling to highly complex sampling.

The commonly used sampling methods are simple random sampling, stratified sampling, systematic sampling, cluster sampling, and multi-stage sampling.
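Three of the sampling methods named above can be sketched with Python's standard `random` module. The function names, the household records, and the 20% sampling fraction are assumptions made for illustration; cluster and multi-stage sampling are omitted to keep the example short.

```python
import random


def simple_random_sample(population, n, rng):
    """Draw n units, each with equal chance, without replacement."""
    return rng.sample(population, n)


def systematic_sample(population, n, rng):
    """Pick a random start, then take every step-th unit (needs n <= len)."""
    step = len(population) // n
    start = rng.randrange(step)
    return population[start::step][:n]


def stratified_sample(population, key, fraction, rng):
    """Sample the same fraction from each stratum defined by key(unit)."""
    strata = {}
    for unit in population:
        strata.setdefault(key(unit), []).append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical sampling frame: 100 households, 25 urban and 75 rural.
rng = random.Random(42)  # fixed seed so the draw is repeatable
households = [
    {"id": i, "area": "urban" if i % 4 == 0 else "rural"} for i in range(100)
]

srs = simple_random_sample(households, 10, rng)
sys_sample = systematic_sample(households, 10, rng)
strat = stratified_sample(households, lambda h: h["area"], 0.2, rng)
print(len(srs), len(sys_sample), len(strat))  # 10 10 20
```

Stratifying by area guarantees that both urban and rural households appear in the sample in proportion to their share of the frame, which a simple random draw does not.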

Methods of data collection may also vary widely depending on the purpose and objectives of your program.

Analyzing Information

A vital component of both monitoring and evaluation is information analysis. The analysis is the process of turning the detailed information into an understanding of patterns, trends, and interpretations.

Once you have analyzed the information, the next step is to write up your analysis of the findings in the form of a report as a basis for reaching conclusions and making recommendations.

The following steps are important in an analysis that culminates in a report:

- Determine the key indicators for the monitoring/evaluation;

- Collect information around the indicators;

- Develop a structure for your analysis based on your intuitive understanding of emerging themes and concerns;

- Go through your data, organize it under the perceived themes and concerns;

- Identify patterns, trends, and possible interpretations;

- Write up your findings, conclusions, and recommendations (if any).

What You Should Know About Monitoring and Evaluation

What is the purpose of monitoring and evaluation in development work?

Monitoring and evaluation in development work aim to provide constant feedback on whether projects are achieving their goals, identify potential problems early on, suggest solutions, evaluate the project’s overall success, and guide the planning of future projects.

How do monitoring and evaluation differ from each other?

Monitoring is a continuous process that tracks project implementation progress, focusing on inputs, activities, and outputs. On the other hand, evaluation is periodic and offers an in-depth analysis, comparing planned achievements with actual results and evaluating the project’s overall relevance, impact, and sustainability.

Why is it essential to avoid duplicating research topics in monitoring and evaluation?

Avoiding duplication ensures that resources aren’t wasted on previously studied topics. Before undertaking a study, it’s important to review existing research to see whether significant questions remain unanswered or whether there is a new perspective to offer.

What are the core objectives of monitoring and evaluation?

The core monitoring and evaluation objectives include relevance, efficiency, effectiveness, impact, sustainability, causality, and exploring alternative strategies.

Can you define monitoring in the context of development projects?

Monitoring is the continuous and systematic assessment of project implementation based on set targets and planned activities. It collects information to answer questions about a project’s progress, identifies strengths and weaknesses, and provides feedback on implementation.

How is evaluation defined in the context of development projects?

Evaluation is a periodic, in-depth analysis that assesses the relevance, performance, impact, success, or lack thereof of ongoing and completed projects concerning stated objectives. It studies the outcomes of a project to inform the design of future projects.