Data analysis and interpretation is the next stage after collecting data through empirical methods. The dividing line between the analysis of data and its interpretation is difficult to draw, as the two processes are symbiotic and merge imperceptibly. Interpretation is inextricably interwoven with analysis.

Analysis is a critical examination of the assembled data, and it leads to generalization.

Interpretation refers to the analysis of generalizations and results. A generalization involves drawing a conclusion about a whole group or category based on information from particular instances or examples.

Interpretation is a search for the broader meaning of research findings. Data should be analyzed with reference to the purpose of the study.

Data should be analyzed in light of the hypotheses or research questions and organized to yield answers to those questions.

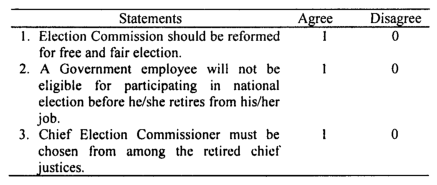

Data analysis can be presented descriptively as well as graphically, for example in charts, diagrams, and tables.

Data analysis involves various processes, such as data classification, coding, tabulation, statistical analysis of data, and inference about causal relations among variables. A robust data pipeline platform can further streamline these processes by moving data reliably from one stage to the next, which helps maintain data quality and reliability.

Proper analysis helps classify and organize unorganized data and gives it a scientific shape. It also helps in studying the trends and changes that occur over a particular period.

What is the primary distinction between data analysis and interpretation?

Data analysis is a critical examination of the assembled data, leading to generalization. In contrast, interpretation refers to the analysis of these generalizations and results, searching for the broader meaning of research findings.

How is a hypothesis related to research objectives?

A well-formulated, testable research hypothesis is the best expression of a research objective. It is an unproven statement or proposition that can be refuted or supported by empirical data, asserting a possible answer to a research question.

What are the four basic research designs a researcher can use?

The four basic research designs are survey, experiment, secondary data study, and observational study.

What are the steps involved in processing data for interpretation?

The steps include editing the data, coding or converting data to a numerical form, arranging data according to characteristics and attributes, presenting data in tabular form or graphs, and directing the reader to the components that are especially striking from the point of view of the research questions.

Steps in processing data for interpretation

- Firstly, data should be edited. Since not all the data collected is relevant to the study, irrelevant data should be separated from the relevant data. Careful editing is essential to avoid errors that may distort data analysis and interpretation. However, any exclusion of data should be done objectively, free from bias and prejudice.

- The next step is coding, that is, converting data to a numerical form and presenting it in a coding matrix. Coding reduces a huge quantity of data to a manageable proportion.

- Thirdly, all data should be arranged according to characteristics and attributes and then properly classified so that it becomes simple and clear.

- Fourthly, data should be presented in tabular form or graphs. However, any tabulation of data should be accompanied by comments explaining why the particular finding is important.

- Finally, the researcher should direct the reader to the components that are especially striking from the point of view of the research questions.
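The editing, coding, classification, and tabulation steps can be illustrated with a small Python sketch. The survey fields (`age`, `opinion`, `favourite_colour`), the opinion codes, and the age classes are hypothetical choices made for illustration, not a standard scheme; a real study would define its own codebook. The final step, directing the reader to striking findings, is a matter of interpretation and is left to the researcher.

```python
from collections import Counter

# Hypothetical raw responses: "age" and "opinion" relate to the research
# question; "favourite_colour" is assumed to be irrelevant to it.
RAW_RESPONSES = [
    {"age": 23, "opinion": "Agree", "favourite_colour": "blue"},
    {"age": 41, "opinion": "disagree", "favourite_colour": "red"},
    {"age": 35, "opinion": "Neutral", "favourite_colour": "green"},
    {"age": 58, "opinion": "agree", "favourite_colour": "blue"},
    {"age": 29, "opinion": None, "favourite_colour": "red"},
]

RELEVANT_FIELDS = ("age", "opinion")

# Hypothetical coding scheme: each answer category gets a numeric code.
OPINION_CODES = {"disagree": 1, "neutral": 2, "agree": 3}


def edit_responses(responses):
    """Step 1 (editing): keep relevant fields only and drop incomplete records."""
    edited = []
    for r in responses:
        record = {field: r.get(field) for field in RELEVANT_FIELDS}
        if all(value is not None for value in record.values()):
            edited.append(record)
    return edited


def code_responses(responses):
    """Step 2 (coding): turn each record into a numeric row of a coding matrix."""
    return [
        [r["age"], OPINION_CODES[r["opinion"].strip().lower()]] for r in responses
    ]


def classify_age(age):
    """Step 3 (classification): place a respondent in an age class."""
    return "under 40" if age < 40 else "40 and over"


def tabulate(matrix):
    """Step 4 (tabulation): cross-tabulate age class against opinion code."""
    return Counter((classify_age(age), opinion) for age, opinion in matrix)


if __name__ == "__main__":
    matrix = code_responses(edit_responses(RAW_RESPONSES))
    table = tabulate(matrix)
    for (age_class, opinion), count in sorted(table.items()):
        print(f"{age_class:<12} opinion={opinion}  n={count}")
```

The cross-tabulation counts respondents in each combination of age class and opinion code; this printed table is where the researcher would add comments on why a particular cell matters.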

Three key concepts of analysis and interpretation of data

Why is data editing essential in the research process?

Data editing is essential to ensure consistency across respondents, locate omissions, reduce errors in recording, improve legibility, and clarify unclear and inappropriate responses.
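As a hedged illustration of these editing checks, the Python sketch below flags omissions, unclear codes, and one inconsistency between answers. The field names, the allowed codes, and the rule that an unemployed respondent should not report income are assumptions made for the example. Flagged records are listed for review rather than silently deleted, so that any exclusion can be decided objectively.

```python
# Hypothetical questionnaire records; field names and rules are assumptions.
RESPONSES = [
    {"id": 1, "age": 34, "employed": "yes", "monthly_income": 2500},
    {"id": 2, "age": None, "employed": "no", "monthly_income": 0},
    {"id": 3, "age": 19, "employed": "no", "monthly_income": 1800},
    {"id": 4, "age": 45, "employed": "Y", "monthly_income": 3200},
]

REQUIRED_FIELDS = ("age", "employed", "monthly_income")
VALID_EMPLOYED = {"yes", "no"}


def edit_report(responses):
    """Return (respondent id, problem) pairs for the editor to review."""
    problems = []
    for r in responses:
        # Omissions: a required answer is missing.
        for field in REQUIRED_FIELDS:
            if r.get(field) is None:
                problems.append((r["id"], f"missing {field}"))
        # Unclear or inappropriate response: value outside the allowed codes.
        employed = r.get("employed")
        if employed is not None and employed not in VALID_EMPLOYED:
            problems.append((r["id"], f"unclear employed value {employed!r}"))
        # Inconsistency (assumed rule): unemployed but reporting an income.
        if employed == "no" and (r.get("monthly_income") or 0) > 0:
            problems.append((r["id"], "income reported but not employed"))
    return problems


if __name__ == "__main__":
    for respondent_id, problem in edit_report(RESPONSES):
        print(f"respondent {respondent_id}: {problem}")
```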

What are the three key concepts regarding the analysis and interpretation of data?

The three key concepts are Reliability (referring to consistency), Validity (ensuring the data collected is a true picture of what is being studied), and Representativeness (ensuring the group or situation studied is typical of others).

Reliability

It refers to consistency. If a method of collecting evidence is reliable, anybody else using this method, or the same person using it at another time, would come up with the same results.

In other words, reliability is concerned with the extent to which an experiment can be repeated, or how far a given measurement will provide the same results on different occasions.

Validity

It refers to whether the data collected is a true picture of what is being studied. In other words, the data should reflect what is being studied rather than being a product of the research method used.

Representativeness

This refers to whether the group of people or the situation being studied is typical of others.

The following conditions should be considered to draw reliable and valid inferences from the data.

- Reliable inferences can be drawn only when the statistics are strictly comparable and the data are complete and consistent. Thus, to ensure comparability across different situations, the data should be homogeneous, complete and adequate, and appropriate.

- An ideal sample must adequately represent the whole population. Thus, when the number of units is huge, the researcher should choose a sample with the same set of qualities and features as the whole population.
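Proportional stratified sampling is one established way to choose a sample that shares the whole population's features on selected characteristics. The Python sketch below is illustrative only: the population, the `area` attribute, and the helper `proportional_stratified_sample` are hypothetical, and matching the population on one attribute does not guarantee representativeness on others.

```python
import random
from collections import Counter, defaultdict


def proportional_stratified_sample(population, key, sample_size, seed=None):
    """Hypothetical helper: sample each stratum in proportion to its size.

    population  -- list of units (here, dicts)
    key         -- function returning the stratum a unit belongs to
    sample_size -- desired total; per-stratum rounding may shift it slightly
    """
    if not 0 < sample_size <= len(population):
        raise ValueError("sample_size must be between 1 and the population size")
    rng = random.Random(seed)  # seeded so the draw is reproducible

    # Group the population into strata by the chosen characteristic.
    strata = defaultdict(list)
    for unit in population:
        strata[key(unit)].append(unit)

    # Draw from each stratum in proportion to its share of the population.
    sample = []
    for members in strata.values():
        k = round(sample_size * len(members) / len(population))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical population: 600 urban and 400 rural households.
population = [
    {"id": i, "area": "urban" if i < 600 else "rural"} for i in range(1000)
]

sample = proportional_stratified_sample(
    population, key=lambda unit: unit["area"], sample_size=50, seed=1
)

# Compare each stratum's share in the population and in the sample.
pop_shares = Counter(unit["area"] for unit in population)
sample_shares = Counter(unit["area"] for unit in sample)
for area in pop_shares:
    print(
        f"{area}: population {pop_shares[area] / len(population):.0%}, "
        f"sample {sample_shares[area] / len(sample):.0%}"
    )
```

Seeding the random generator is a design choice for reproducibility; in practice the seed would be recorded so that the same sample can be redrawn and checked.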